Generative deep learning (part 1/2)

Ch 8 is the last chapter with practical applications in Deep Learning with Python (DLP). It includes 5 examples that showcase how DL can be used for creative purposes. This post goes over the first 3 examples.

Ex 1: Text Generation

The first example trains a DL model on some writings of Nietzsche and then generates Nietzsche-style text on its own. Basically, the model predicts the next token using the previous tokens as input. This type of model is called a language model.

Here, a token is defined as a character. For instance, after seeing “I have a ca”, the model will try to predict the next character, which can be ‘n’, ‘r’, ‘t’, etc. Each of these characters is associated with a probability. Therefore, the output layer of our model is a softmax over all the possible characters. The input is also one-hot encoded at the character level rather than the word level. We call this model a character-level neural language model.
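To make the character-level one-hot encoding concrete, here is a minimal NumPy sketch. The toy corpus, the context length `maxlen`, and the variable names are assumptions for illustration, not the settings used for the Nietzsche model.

```python
import numpy as np

# Toy corpus and context length (both assumptions for illustration).
text = "i have a cat. i have a car."
maxlen = 5   # number of characters the model sees before predicting
step = 1     # stride between consecutive training windows

# Vocabulary: every distinct character gets an integer index.
chars = sorted(set(text))
char_indices = {c: i for i, c in enumerate(chars)}

# Slice the text into (context, next character) pairs.
sentences = [text[i:i + maxlen] for i in range(0, len(text) - maxlen, step)]
next_chars = [text[i + maxlen] for i in range(0, len(text) - maxlen, step)]

# One-hot encode: x has shape (samples, maxlen, vocab), y has shape (samples, vocab).
x = np.zeros((len(sentences), maxlen, len(chars)), dtype=bool)
y = np.zeros((len(sentences), len(chars)), dtype=bool)
for i, sentence in enumerate(sentences):
    for t, ch in enumerate(sentence):
        x[i, t, char_indices[ch]] = True
    y[i, char_indices[next_chars[i]]] = True
```

Each row of `y` is the target the softmax output is trained to match, so the model's output size equals the number of distinct characters.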

Our language model uses stochastic sampling to pick the next character. That is, the model randomly samples the next character from its predicted probability distribution. To control the amount of randomness, we use a parameter called the softmax temperature. A high temperature generates more surprising and interesting texts, while a low temperature generates more realistic and predictable, but also more repetitive, texts.
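The effect of temperature is easy to see in a small sketch. The function names `reweight_distribution` and `sample` are my own choices here, and the code assumes the input probabilities are strictly positive (which holds for a softmax output).

```python
import numpy as np

def reweight_distribution(probs, temperature=1.0):
    """Sharpen (temperature < 1) or flatten (temperature > 1) a distribution."""
    probs = np.asarray(probs, dtype=np.float64)
    logits = np.log(probs) / temperature
    exp = np.exp(logits - logits.max())   # subtract the max for numerical stability
    return exp / exp.sum()                # renormalize so the result sums to 1

def sample(probs, temperature=1.0, rng=None):
    """Draw one index at random from the reweighted distribution."""
    rng = np.random.default_rng() if rng is None else rng
    p = reweight_distribution(probs, temperature)
    return int(rng.choice(len(p), p=p))

# Hypothetical next-character probabilities for three candidates.
p = [0.7, 0.2, 0.1]
low = reweight_distribution(p, temperature=0.2)    # mass concentrates on the top choice
high = reweight_distribution(p, temperature=2.0)   # mass spreads toward the other choices
```

With a temperature of 1.0 the distribution is unchanged; as the temperature approaches 0, sampling approaches always picking the most likely character.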

The texts generated by our model can be seen here. The Python script provided in the book has a mistake. I corrected this mistake and you can see the correction in my Python code.

Ex 2: DeepDream

DeepDream is an artistic image modification technique. Its algorithm is similar to the one we used to visualize convnet filters in Ch 5.

In other words, it uses gradient ascent on the input to the convnet.
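Here is a toy sketch of gradient ascent on an input. A random linear map `W` stands in for a convnet layer, which is purely an assumption so that the gradient can be written by hand; the real example differentiates through Inception V3.

```python
import numpy as np

rng = np.random.default_rng(0)
W = rng.normal(size=(8, 16))   # hypothetical "layer": 8 units looking at 16 input pixels
x = rng.normal(size=16)        # the input we modify (the weights stay fixed)

def layer_loss(x):
    # Mean squared activation over the whole layer.
    return np.mean((W @ x) ** 2)

def layer_grad(x):
    # Analytic gradient of layer_loss with respect to the input x.
    return 2.0 * W.T @ (W @ x) / W.shape[0]

step = 0.01
losses = [layer_loss(x)]
for _ in range(20):
    g = layer_grad(x)
    g /= max(np.mean(np.abs(g)), 1e-7)   # normalize so the step size is comparable across iterations
    x = x + step * g                     # ascent: move the input *up* the gradient
    losses.append(layer_loss(x))
```

Note that the update changes the input rather than the weights, and adds the gradient rather than subtracting it, so the layer's activation grows at every step.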

However, DeepDream differs from filter visualization in three respects. First, DeepDream tries to maximize the activation of entire layers, not of a specific filter. Second, DeepDream starts from an existing image instead of a blank one. Third, input images in DeepDream are processed at different scales, called octaves, to improve the quality of the visualization.
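The octave idea boils down to running gradient ascent on a small version of the image first and then on successively larger versions. Below is a sketch of how the sequence of image sizes could be computed; the function name and the default values for `num_octaves` and `octave_scale` are assumptions for illustration.

```python
def octave_shapes(original_shape, num_octaves=3, octave_scale=1.4):
    """Return (height, width) pairs from the smallest octave up to the original size."""
    shapes = [tuple(original_shape)]
    for i in range(1, num_octaves):
        # Each additional octave shrinks both dimensions by another factor of octave_scale.
        shapes.append(tuple(int(dim / octave_scale ** i) for dim in original_shape))
    return shapes[::-1]   # process the smallest scale first

shapes = octave_shapes((400, 600))
print(shapes)   # [(204, 306), (285, 428), (400, 600)]
```

At each scale the image is resized to the next shape, gradient ascent is run, and the result is upscaled again for the following octave.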

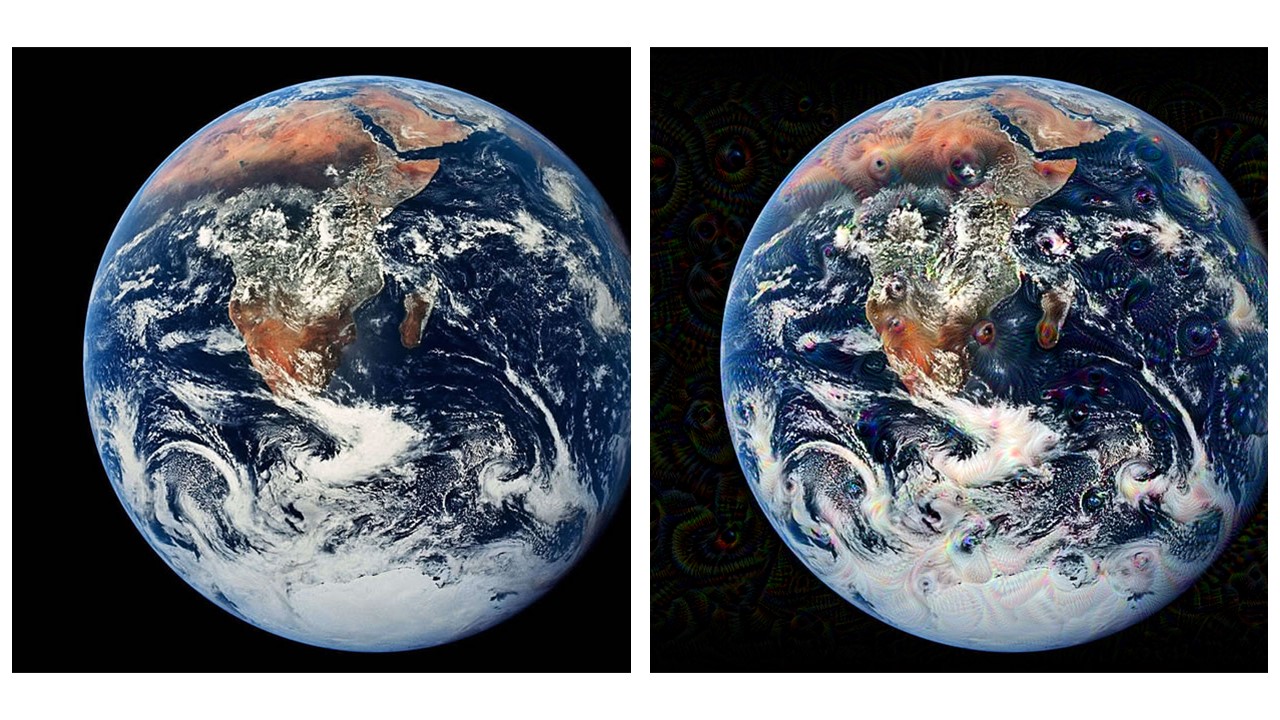

Here we applied the higher layers of the Inception V3 model, with pre-trained ImageNet weights, to a picture of Earth. Detailed Python code can be found here. The left image is the original picture of Earth. The right image is the same picture modified by DeepDream.

Ex 3: Neural Style Transfer

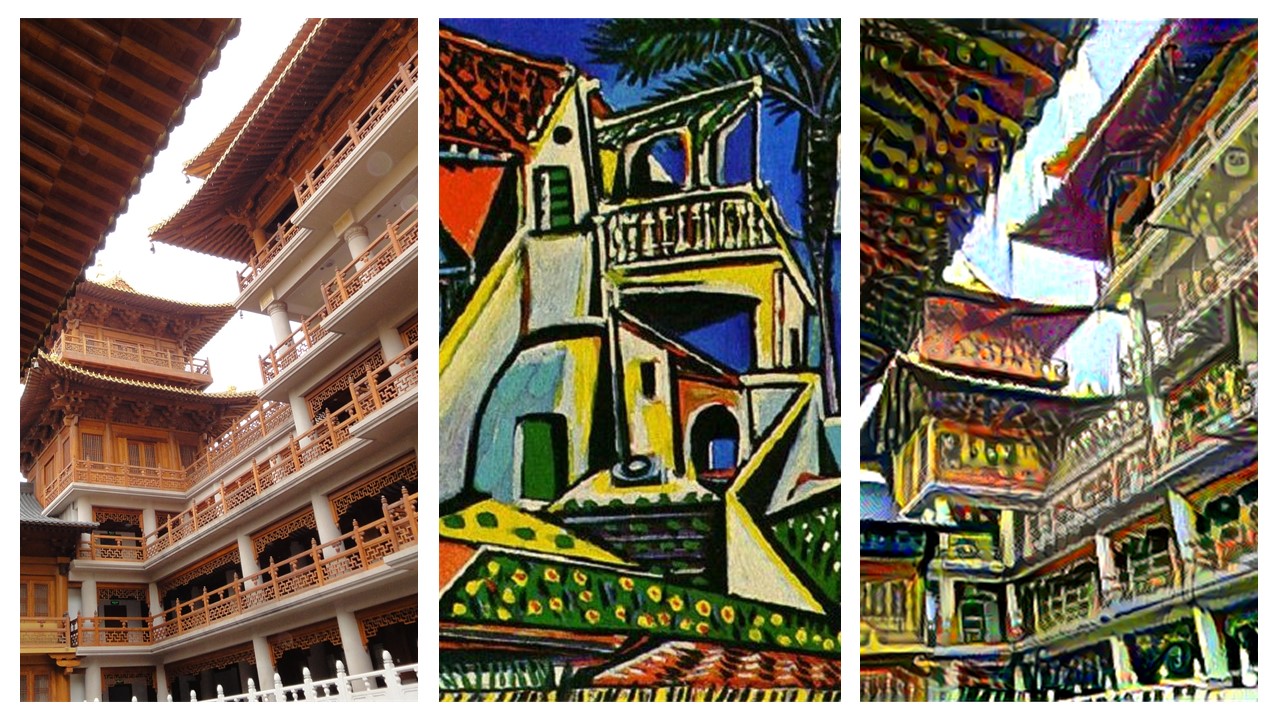

In this example, we apply the style of a reference image to the content of a target image. This technique is called neural style transfer. The figure above shows what we did: target image + style-reference image = generated image. See here for detailed Python code.

Generally speaking, the algorithm consists of computing and minimizing a final loss function, which is a weighted sum of 3 specific losses. The 1st loss is the content loss. It’s the L2 norm between the activations of an upper layer of a pre-trained convnet (VGG19 here) computed over the target image, and the activations of the same layer computed over the generated image. This ensures the generated image looks similar to the target image.
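As a rough NumPy sketch, the content loss and the final weighted combination could look like this. The arrays stand in for convnet activations, and the function names and default weights are illustrative assumptions, not necessarily the values used in my script.

```python
import numpy as np

def content_loss(target_features, generated_features):
    """Sum of squared differences between two activation maps of the same layer."""
    return float(np.sum((generated_features - target_features) ** 2))

def total_loss(content, style, variation,
               content_weight=0.025, style_weight=1.0, tv_weight=1e-4):
    """Weighted sum of the three losses (the weights here are illustrative assumptions)."""
    return content_weight * content + style_weight * style + tv_weight * variation

# Hypothetical activations of shape (height, width, channels).
target = np.ones((2, 2, 3))
generated = target + 0.5
c = content_loss(target, generated)   # 12 elements, each contributing 0.5 ** 2
```

Raising `content_weight` relative to `style_weight` keeps the generated image closer to the target image.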

The 2nd loss is the style loss. It uses the Gram matrix of a layer’s activations, that is, the inner product of the feature maps of a given layer. Instead of using a single layer as in our content loss, the style loss is the L2 norm between the Gram matrices of multiple layers computed over the style-reference image and over the generated image. This ensures the textures of the generated image look similar to those of the style-reference image.
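Here is a NumPy sketch of the Gram matrix and the style loss for a single layer. The normalization constant follows one common formulation and should be read as an assumption; the random array stands in for real convnet activations.

```python
import numpy as np

def gram_matrix(features):
    """Inner products between the channels of a (height, width, channels) activation map."""
    h, w, c = features.shape
    flat = features.reshape(h * w, c).T   # one row per channel, one column per position
    return flat @ flat.T                  # shape (channels, channels)

def style_loss(style_features, generated_features):
    """Squared difference between Gram matrices, scaled by layer size (an assumed normalization)."""
    h, w, c = style_features.shape
    s = gram_matrix(style_features)
    g = gram_matrix(generated_features)
    return float(np.sum((s - g) ** 2) / (4.0 * (c ** 2) * ((h * w) ** 2)))

rng = np.random.default_rng(0)
feats = rng.normal(size=(4, 4, 3))   # hypothetical layer activations
flipped = feats[::-1, ::-1, :]       # same values, different spatial arrangement
```

Because the Gram matrix sums over all spatial positions, `feats` and `flipped` have (up to rounding) the same Gram matrix. That is why this loss captures texture rather than layout. For multiple layers, the per-layer losses are simply added up.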

The 3rd loss is the total variation loss. It’s computed from the differences between neighboring pixels of the generated image. This encourages spatial continuity in the generated image and avoids overly pixelated results.
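A minimal NumPy sketch of a total variation loss is shown below. The exponent of 1.25 is one common choice and is an assumption here, as is the (height, width, channels) layout of the image array.

```python
import numpy as np

def total_variation_loss(img, power=1.25):
    """Penalize differences between vertically and horizontally adjacent pixels."""
    a = (img[:-1, :-1, :] - img[1:, :-1, :]) ** 2   # each pixel vs. the pixel below it
    b = (img[:-1, :-1, :] - img[:-1, 1:, :]) ** 2   # each pixel vs. the pixel to its right
    return float(np.sum((a + b) ** power))

rng = np.random.default_rng(0)
flat = np.full((8, 8, 3), 0.5)      # perfectly smooth image
noisy = rng.uniform(size=(8, 8, 3)) # pixel-level noise
tv_flat = total_variation_loss(flat)
tv_noisy = total_variation_loss(noisy)
```

A constant image has zero total variation, while a noisy one scores high, so minimizing this term pushes the generated image toward smooth regions.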